Stable Diffusion 3 in Daz AI Studio?

linvanchene

Posts: 1,382

Stability AI released the API for Stable Diffusion 3.

I spent $10 to purchase 1000 credits from Stability AI to test it in ComfyUI.

Let's address the elephant in the room:

prompt:

a photo of an elephant in a security control room,

holding a sign "SD3 for Daz AI?"

rim lighting, depth of field, cyberpunk,

###

Available information

As far as we know Daz AI Studio is based on SDXL.

Stable Diffusion 3 is an upgrade to the SD1.5 and SD2 base models.

Images can be generated at 1024x1024.

Different aspect ratios and upscaling are possible.
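For anyone wondering what "different aspect ratios" means in pixels, here is a rough sketch that keeps the image at about 1 MP (the 1024x1024 budget mentioned above) and derives width and height from a ratio. Rounding to multiples of 64 is my assumption, borrowed from common SDXL practice; the function name is made up for this post and is not part of any Stability AI API.

```python
import math

def dims_for_aspect(w_ratio, h_ratio, target_pixels=1024 * 1024, multiple=64):
    """Return (width, height) close to target_pixels for the given aspect ratio.

    Assumption: both sides are rounded to the nearest multiple of 64.
    """
    ratio = w_ratio / h_ratio
    height = math.sqrt(target_pixels / ratio)
    width = height * ratio
    # Snap each side to the nearest allowed multiple.
    snap = lambda value: round(value / multiple) * multiple
    return snap(width), snap(height)

if __name__ == "__main__":
    for w, h in [(1, 1), (16, 9), (9, 16)]:
        print(f"{w}:{h} ->", dims_for_aspect(w, h))
```

The actual resolutions the API returns for each ratio may differ, so treat the numbers as an approximation only.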

SD3 is advertised to be better with multi-subject prompts and spelling abilities.

From a technical point of view it should be possible to provide both SDXL and SD3 checkpoints in Daz AI Studio.

Nevertheless, training LoRA for different base models would take additional time.

###

Questions & Speculation:

Based on that, three scenarios seem plausible:

A) Training with SDXL in Daz AI Studio has progressed too far to wait for SD3

B) It was known that SD3 will arrive. SDXL was used for some first tests. The actual training will continue with SD3.

C) Some LoRA will be released for SDXL. Later on there will be a switch to SD3.

###

My impressions:

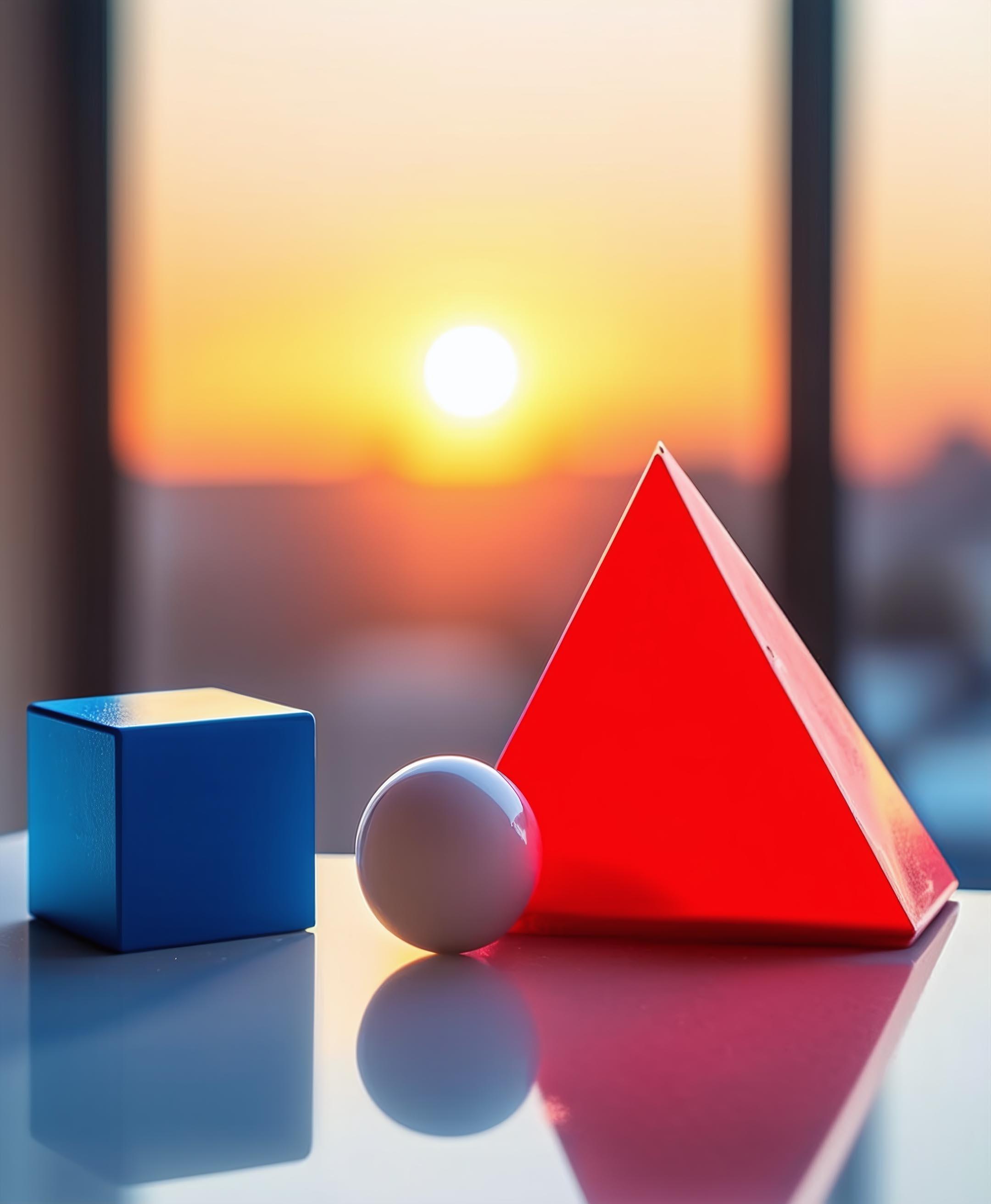

multi-subject prompts

Using prompts to describe locations of objects in combination with specific colors seems to work better.

But there are still limits on that front with more complex prompts.

prompt:

a small blue cube on the left,

a white sphere in the middle,

a large red pyramid on the right,

on a desk, office, sunrise,

###

prompt:

photo of sports cars racing,

blue sports car on the left,

white sports car in the middle,

red sports car on the right,

view from behind, new york city, yellow smoke, rain, at night, back-lit, depth of field,

spelling abilities

Spelling seems to work better for short text.

With longer text you need to reroll several times to get useful results.

prompt:

a wide shot of a gothic male android with a reflective face plate and a visor,

using a flame torch to cut through a metal door, floating sparks,

interior of a space station in the orbit of a noir planet,

metal sign "Under Construction",

ominous,

back-lit, rim light, depth of field,

###

close-up view of a security monitor,

a display showing an open window "Stable Diffusion 3, API test, sd3api.py, release date, Daz AI Studio, soon?, ",

in a security control room,

at night, rim lighting, depth of field, cyberpunk,

###

Did you test Stable Diffusion 3?

Do you think a version of Stable Diffusion 3 will be available for Daz AI Studio?

Comments

Not bad.

How many images do these 1000 credits last for?

Stability AI is working with this pricing on its developer platform:

SD3 - 6.5 credits per successful generation of a 1 MP image

SDXL - 0.2 up to 0.6 credits per successful generation of a 1 MP image

For SDXL the value depends on 1024x1024 resolution and variable steps.
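To turn those prices into a number of images, a quick back-of-the-envelope calculation helps. The sketch below only uses the figures quoted in this thread (1000 credits for $10, and the per-image credit costs from the 2024-04-20 screenshot); the function names are just for illustration. Exact fractions are used to avoid floating-point rounding surprises.

```python
from fractions import Fraction

CREDITS_PURCHASED = 1000
PRICE_USD = "10"

# Credits per successful 1 MP generation, as quoted above.
CREDITS_PER_IMAGE = {
    "SD3": "6.5",
    "SDXL (cheapest)": "0.2",
    "SDXL (most expensive)": "0.6",
}

def images_for_budget(credits, cost_per_image):
    """Whole number of images that fit into the credit budget."""
    return Fraction(credits) // Fraction(cost_per_image)

def usd_per_image(price_usd, credits, cost_per_image):
    """Price of a single image in USD for the given credit bundle."""
    return float(Fraction(price_usd) / Fraction(credits) * Fraction(cost_per_image))

if __name__ == "__main__":
    for model, cost in CREDITS_PER_IMAGE.items():
        count = images_for_budget(CREDITS_PURCHASED, cost)
        price = usd_per_image(PRICE_USD, CREDITS_PURCHASED, cost)
        print(f"{model}: {count} images, about ${price:.4f} each")
```

So 1000 credits cover 153 SD3 images (about 6.5 cents each), versus roughly 1666 to 5000 SDXL images. Every reroll is a separate successful generation and counts against that total.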

Source:

Attached to this post. Screenshot made on Stability AI - Developer Platform 2024-04-20.

Unknown licensing terms for public version of SD3

The public release of SDXL was under a Stability AI CreativeML Open RAIL++-M License dated July 26, 2023.

A public release of SD3 is rumored to arrive at some point but its licensing terms remain unclear.

Daz Productions Inc., as a partner of Stability AI, may have special conditions.

Ok, thank you very much for the information.

According to a Twitter post last month, Stability AI's CTO and interim CEO wrote that the public release will be open-source:

https://twitter.com/thibaudz/status/1772204495167983909

At Computex Taipei, Co-CEO @chrlaf officially announced the open release date of Stable Diffusion 3 Medium: June 12th.

source: Stability AI post on X

Is it possible to share some information if an SD3 version will be available for Daz AI Studio?

Having the latest version of Stable Diffusion might be nice, but I think most users would prefer to have more styles to choose from in the current version of SD run by/for Daz, such as the two sample images below. They were made in Stable Diffusion SDXL 1.0, running locally on my laptop at home (a relatively cheap gaming laptop with an Nvidia GeForce 3060 GPU with 6 GB VRAM that has never been used for gaming, only for Daz 3D Studio, and now also AI image creation...).

True, but I think most users here at DAZ couldn't care less about AI image generation and/or styles, since they can do that anywhere, with any AI app. Using AI to generate images is, for most users, the same as a DS user loading "everything" from a preset and hitting render. DAZ needs to find ways to allow AI to interact with DS and the setup/render process, beyond just generating images. If anything, I feel AI is the best postwork tool.

Trying not to go too much off topic here, since the idea of this thread was to focus on news related to Stable Diffusion 3.

But an interesting point was raised:

A style could be provided by a LoRA or by a checkpoint.

Based on previous advertisements sent out by Daz, one idea seems to be to provide LoRA for style:

In this example the "Candle Light" would be a lighting style provided by a LoRA.

The LoRA modifies the results of the checkpoint to alter the generated image with the help of trained triggers.
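To make "modifies the results of the checkpoint" a bit more concrete: a LoRA stores a small low-rank update that is added on top of a weight matrix of the checkpoint. Below is a toy sketch in plain Python with tiny matrices. The names (`apply_lora`, `lora_up`, `lora_down`, `strength`) are illustrative, and the usual alpha/rank scaling is folded into `strength`; real tools do this on large tensors across many layers.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]

def apply_lora(weight, lora_up, lora_down, strength=1.0):
    """Return weight + strength * (lora_up @ lora_down) without changing weight."""
    delta = matmul(lora_up, lora_down)
    return [
        [w + strength * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(weight, delta)
    ]

if __name__ == "__main__":
    base = [[1.0, 0.0], [0.0, 1.0]]   # stand-in for one checkpoint weight matrix
    up = [[1.0], [2.0]]               # 2x1, rank 1
    down = [[0.5, 0.0]]               # 1x2, rank 1
    print(apply_lora(base, up, down, strength=0.5))
    print(apply_lora(base, up, down, strength=0.0))  # strength 0 leaves the base as-is
```

With strength 0 the checkpoint is unchanged, which is why lowering a LoRA's weight in the UI reduces its influence on the image.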

In theory you could train LoRA with toon or anime styles as shown in the example images by tom.rowlandson.1727.

The question is:

How well do different LoRA work together?

While working with offline local UI solutions I limit myself to three different active LoRA.

In some cases LoRA can work together very well if they influence different areas.

In other cases their styles clash with each other based on how they were trained.

There are conflicts because the same tags or trigger words are used etc...
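One cheap check before stacking LoRA is to compare their trigger words. The sketch below is only an illustration of that idea: the LoRA names and tags are invented (apart from "Candle Light" from the example above), and shared tags are just one possible source of clashes, so a clean result does not guarantee that the styles will mix well.

```python
from itertools import combinations

MAX_ACTIVE_LORAS = 3  # the personal limit mentioned above, not a technical one

def shared_triggers(loras):
    """Map each pair of LoRA names to the trigger words they have in common.

    loras: dict of LoRA name -> set of trigger words (hypothetical data).
    Pairs without overlap are left out of the result.
    """
    conflicts = {}
    for (name_a, tags_a), (name_b, tags_b) in combinations(sorted(loras.items()), 2):
        overlap = {t.lower() for t in tags_a} & {t.lower() for t in tags_b}
        if overlap:
            conflicts[(name_a, name_b)] = sorted(overlap)
    return conflicts

if __name__ == "__main__":
    active = {
        "candle_light": {"candle light", "warm glow"},
        "toon_style": {"toon", "flat shading"},
        "anime_style": {"anime", "Flat Shading"},
    }
    if len(active) > MAX_ACTIVE_LORAS:
        print("More LoRA active than the usual limit")
    for pair, words in shared_triggers(active).items():
        print(pair, "share", words)
```

Here the two style LoRA share a tag, while the lighting LoRA touches a different area and shows no overlap.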

Some people have great skill at training LoRA so it only adds the one element that is needed without influencing anything else.

My guess is that behind the scenes a lot of learning and testing is currently going on to find out which combinations work and which do not...

Checkpoints can provide styles

The current checkpoint available for Daz AI Studio generates images that fall in between 3D and stylized photo retouching.

To create a more toon-like look, a different checkpoint could be trained with toon renders...

Ultimately, the styles we can get with Daz AI Studio depend on the images Daz has available to train checkpoints and LoRA.

In theory, Daz could make use of this community to provide images rendered in different engines with specific shaders.

Edited: Split into two different posts to keep the topics separate if needed...

What are currently popular SD base models in the open-source community?

My current impressions are:

New base models with more parameters provide better control

The idea is to use checkpoints and LoRA to further train base models in the specific areas where they are lacking.

At regular intervals it makes sense to release an updated version of a base model that is further improved to better understand directions and more detailed concepts.

Example - Understanding complex prompts

SDXL 1:

Stable Diffusion 3:

A huge limitation of SDXL is that colors, clothing and character features get mixed up all over the image.

The Stable Diffusion 3 image was generated on the second try.

So I did not have to reroll xy times until I got lucky...

In theory Daz AI Studio could provide an SD 3 checkpoint and get even more control combined with LoRA.

Daz AI Studio is the user interface.

That user interface could be used to provide many different checkpoints - each offering different types of LoRA and styles...