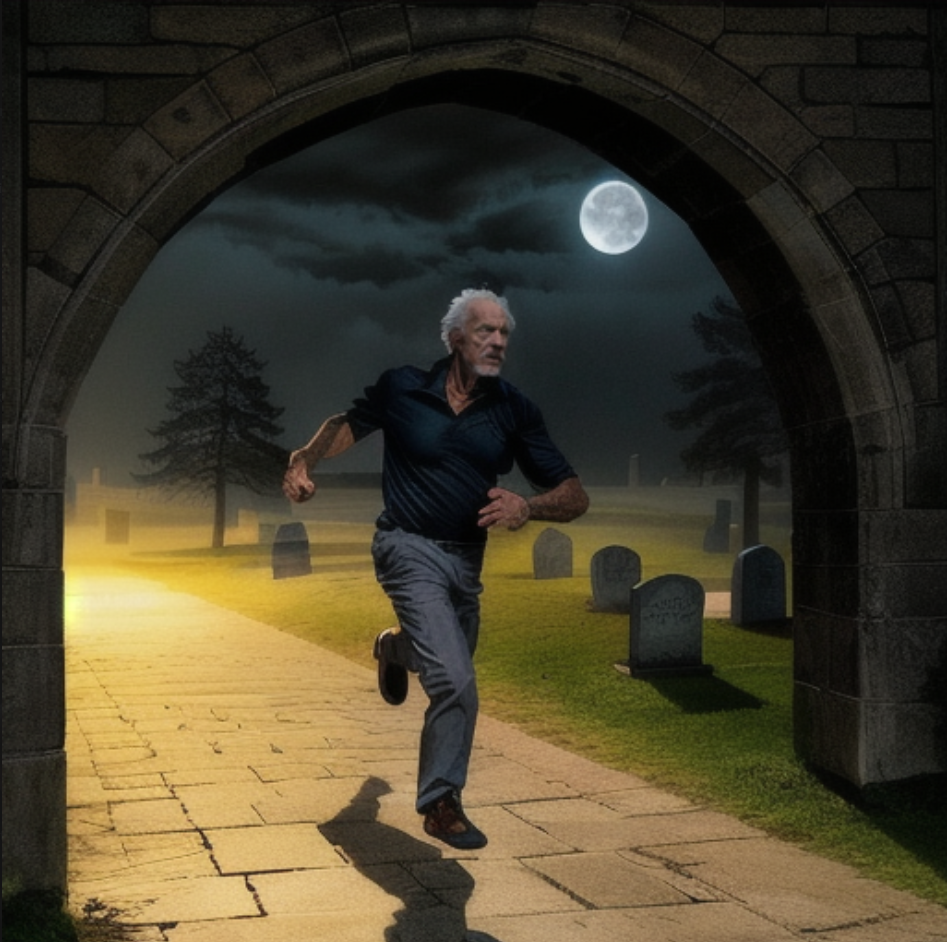

P4 Dork is out of the Graveyard - Carrara to SD workflow - warning AI discussion

Have been experimenting with Stable Diffusion. Carrara is ideal for creating quick simple starting images for Stable Diffusion's image2image processor. Here, I reached back into the depths of my content libraries and pulled out 'Dork,' also known as the Poser 4 man. Quickly and easily blocked out a scene using a variety of Carrara tools. 10 minutes, max. I used the spline modeler for the arch. The path and grass are primitive planes. The trees are default carrara plant editor trees. The moon is a primitive cylinder. The gravestones are vertex grids with added thickness, and duplicated. I inserted a spotlight by the 'moon' to get the shadows. That is it.

Here is the raw render.

Then I took the raw render and put it in SD's image2image processor. I used simple prompts for a man running out of a graveyard under an arch at night with a full moon. And the result is not just a random image, but one with the details that I wanted. That was just one pass. An actual workflow would be to iteratively improve the elements of the image with masking specific sections of the image, more prompts, etc. Dork is back!

Comments

I am putting this post here instead of in the Art Forum because I am emphasizing how simple this particular Carrara scene is, and how easily Carrara can edit all types of 'block out' elements.

Particularity!

One could easily put in the prompts without the Carrara render and get a different scene, a 'better' scene, out of Stable Diffusion or Midjourney or Dall-E or whatever. That is not the point here. The point here is that you can get this particular scene and setting. This particular graveyard, this particular path, this particular placement of the moon, etc. There are other ways to accomplish the same end, but hopefully one can see how quickly and easily Carrara can be used in this way. I am emphasizing the editing tools, one example being the spline modeler to make the arch.

"That must be wonderful, I don't understand it at all" (Moliere) But the final product is impressive.

That turned out Fantastic!!! I must say, however... I didn't recall P4 Man looking so good in a raw render. I remember him as looking pretty bad. Must've been that I was so new to 3d back then. Wow. SD really made him look Great!!!

It probably won't be too long before they figure out how to do hands, paws, etc., better.

I like content like this. I do play a little SD myself. Very cool.

I haven't used Stable Diffusion so wouldn't know where to start.. this means you @Diomede will have to start a thread tutorial from 'Carrara to Stable Diffusion for dummies'

but wow.... Dork came out well

Might start such a thread, although I think my current interest in Stable Diffusion will be brief.

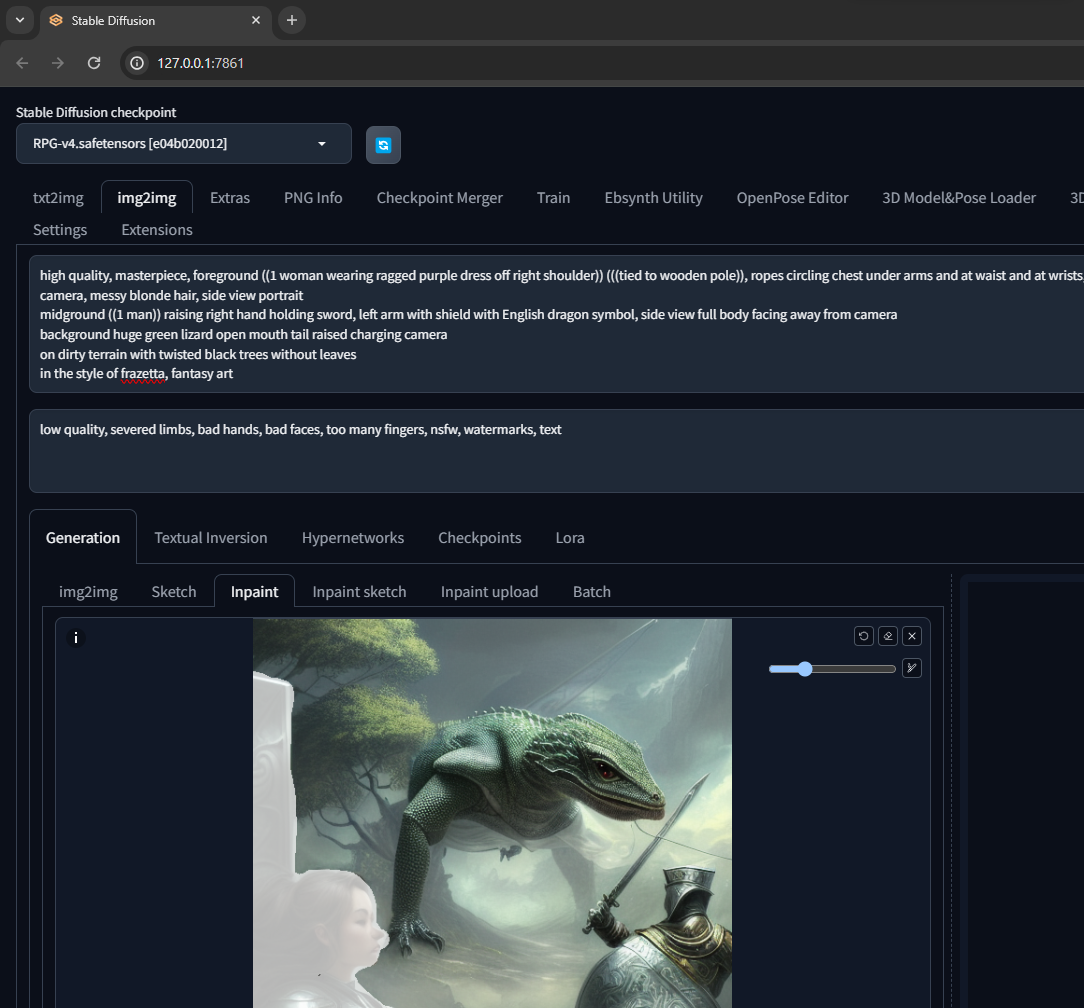

One more illustration. Posing, composition, and similar factors drive my current interest in a 3D-to-Stable Diffusion workflow. A plugin called Controlnet is key. I will use the Poser 4 Dork Businessman and the Controlnet functions for 'Canny' and 'OpenPose' to demonstrate.

Oversimplified summary. Stable Diffusion starts with noisy pixels, just random spots, and then uses fancy math to converge on an image defined by a set of words or another image. A dataset correlates the words with elements of other images which have been tagged with the words. Describe in words what you want the image to be, and the program uses the labels/tags in the dataset/model to converge the noisy image to what correlates to the words you listed. You can also use a second image as a prompt instead of words. Controlnet cn help with that. Controlnet has functions which can take elements from one image and use them as guideposts for a second image. The 'canny' function identifies the edges in one image. The 'open pose' function identifies the angles of human limbs in one image. So, a 3D program like Carrara or Daz Studio or Blender or Poser or... can be used to quickly specify a pose, or arrange a composition, or... in one image to be used to influence a second image. Here, I am going to use the ancient Poser 4 Dork businessman in Controlnet to direct Stable Diffusion on how to interpret the verbal prompts. Clear as mud?

A - I want a man in a suit sitting in an office, and I want to see the whole man, not just from thighs up.

a1. Stable Diffusion with No Help from Carrara - old man in suit

I list a bunch of positive prompts in the first big box, such as detective sitting in office, gray hair, etc. And the second big box has negative prompts, such as low quality, bad anatomy, etc. I set it to give me 4 choices, seen in the bottom right of the attached screengrab. In the attached screengrab, see how the four choices have a variety of poses but I want something specific.

a2. A Carrara render of P4 Dork Businessman in sitting pose.

a3. Place Carrara render of sitting businessman in Controlnet area of Stable Diffusion, check 'enable,' choose the function (canny in this case), and click the fireball looking thing to preprocess. A render with black background and white lines appears next to the Controlnet imported Carrara render. Use stable diffusion to generate again with the same prompts as (a1). See how the resulting image has a man matching the same pose as the Canny white lines.

a4. Repeat the same process with the 'openpose' function. See how the white outline of canny has been replaced by a multi-colored stick figure matching the sitting pose of the Carrara render. Running Stable Diffusion with the same verbal prompts again gives a result of a man in a suit in the correct pose. So either function might work depending on the situation.

a5 Repeat but instead of sitting,make the old man stand and see the full figure, not just thighs up. I have a second render of the P4 Dork businessman.

a6. Here is the sitting pose render example again, but this time instead of gray hair, the verbal prompts specify long blonde hair. The background description is changed to a bench in the park.

.

Bottom line - in my view, 3D programs and 3D skills can be partnered with Stable Diffusion, especially with Controlnet functions like Canny and OpenPose.

I am doing this a lot, but gasp, using DAZ studio with Filament , so cannot share here

, so cannot share here

there is a thread in Art Studio I do though so no problems https://www.daz3d.com/forums/discussion/591121/remixing-your-art-with-ai#latest

https://www.daz3d.com/forums/discussion/591121/remixing-your-art-with-ai#latest

this video sort of can be shared here as a Carrara Howie Farkes Tea Garden video was used in Stable Diffusion Deforum for the background

Bunny is DAZ studio iray however

Looking good. I still am not animating in Stable diffusion. The animaiton related extensions have conflicts somehow for my install. Can't identify the source.

Yes, absolutely amazing. This is what I think about when someone says, "remixing your art". Very impressive.

Thanks, Walt. Always more to learn.

Another Example. Here is the Stable Diffusion AI alteration of a Carrara render.

Here is the original Carrara render using the Poser 7 Simon and Sydney models. I also rendered out a Depth pass, which I used in the Controlnet extension for Stable Diffusion.

The Carrara scene and render took about 15 minutes to generate my starter scene. I was playing around for a couple hours nefore I settled on the Stable Diffusion image. I had decent alternatives within 20 minutes of getting started but it can be fun to play around with different settings. I ended up using two of Controlnet's extensions at the same time, one is the Carrara depth pass. and the other was 'canny.' to find the outlines. And it is possible to mask out only part of the image within Stable Diffusion for further manipulation. Here is an example of a image I ultimately rejected.

.

One more. Fifteen minutes for a quick Cary Grant North by Northwest highlight. Poser 4 Dork Businessman and the Wright brothers plane from the Carrara content browser.

Poser 4 / Carrara 5 level scene.

Run through Stable Diffusion Controlnet 'canny' adjustment one time with simple Cary Grant running toward camera with biplane over shouder in a farm field as the prompt., no other adjustments.

I am curious how Brash and Moxie would look realistic now

Don't tell anyone, but I have been reviewing how to make a LoRa for each.

I don't know enough to have any idea if it is better to make a LoRa or do embedding. I've only tried one embedding that I downloaded. The effect was too strong for my taste but given my beginner level of skill the issue could easily be that I did not understand how to control it.

King Kong climbing the Eifel Tower.

Limiting myself to using verbal prompts, I had a very hard time generating King Kong doing the Paris thing. I could get very good renders that included both Kong and the tower - sitting on a ledge of the tower, standing next to the tower, etc, etc, etc. I even tried putting the word climbing in ((())) triple parantheses! I attach an example 4 images of the kind of results I got from merely using verbal prompts in Stable Diffusion. They are really good images of Kong, just not what I am specifically looking for.

Ghoulish Objects from the Carrara Native Content Browser Graveyard

There is a very rudimentary gorilla model in the native content browser. I rigged it and posed it in a climbing position. There is also a simple Eifel Tower model. I stuck it on a Carrara terrain with a plateau filter. I placed the Gorilla on the side of the tower.

This time, I started with the Carrara render posted above. This time, I used the standard render in Controlnet using the 'canny' function, not a depth pass.

The result

you are really getting the hang of this

in a year we will be watching Tom and Jerry cartoons AI filtered with real fur etc

already possible for single frames

I imagine video games, old films will all get the AI treatment

Thanks Wendy. You are correct about the likely application to old films and similar. Under American law and most other places by treaty, films before 1928 are now public domain. Someone could AI process the silent works of Buster Keaton and Charlie Chaplin, for example.

Brash and Moxie?

As an experiment, I tried a simple scene with my custom Brash and Moxie figures. I loaded Brash 2 and Moxie 3 with their formal uniforms in Carrara. Composed props and set using simple primitives. Rendered out a base photoreal render and a depth pass. Placed the Photoreal render in the 'canny' channel, the depth pass in the depth channel, reduced each channel to 0.5, and prompted Stable Diffusion to generate an image in 1940s comic style, but put anime among the negative prompts.

Base Render

Result

Here is the result of using the same verbal prompt and the same image prompts, but choosing a different model.