UberEnvironment: HDR: I don't understand what the default images are.

I'm trying to learn about UberEnvironment.

I loaded one and selected "Set HDR KHPark".

There's nothing else in the scene.

When I render, I get a blank window.

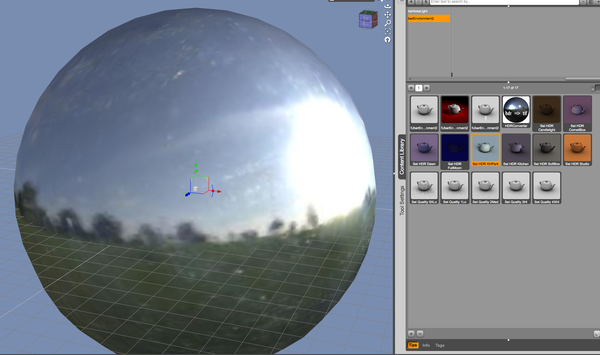

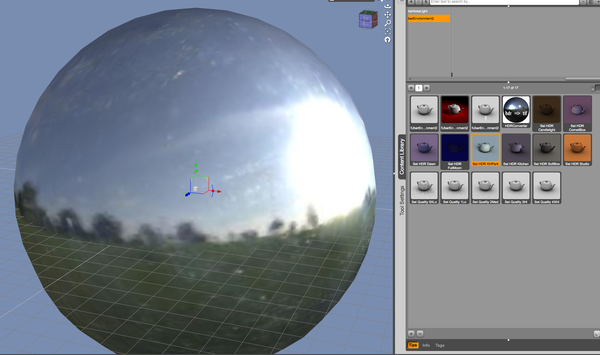

I zoom out so I can see the UberEnvironment sphere, and I see the sphere with a park image on it (screenshot). I render... nothing.

I clearly completely misunderstand what is going on here.

Shouldn't that park be a background or something?

Screenshot_2014-11-27_23.40_.17_.png


Comments

The HDR files are for lighting, and as with most lights in 3D, you don't normally want to see the light source.

The sphere that the HDR is mapped to is set as 'non-rendering, visible only in preview but still light emitting' by default.

To see the lighting effect, you need some objects in the scene to be lit and to cast shadows.

HDRs differ from other lights in that they contain a full 360° environment. To see it, select the sphere and, in the Parameters tab, change it to 'visible in render'.

I guess I don't understand what the images do, then. Some of the videos I've talked about that use the park above rotate the sphere to move where the "sunlight" points... but what do the grass, trees, and clouds do?

Hi.

There is a technique used in 3D rendering called Image Based Lighting, often referred to simply as "IBL". In the real world, objects are lit by both direct and indirect light. Outside, the direct lighting comes from the sun or, at night, from the moon and stars. (Technically, though, moonlight is indirect, since it is sunlight reflected off the moon.) Indirect lighting comes from light reflected off other objects, such as clouds, the ground, or buildings.

To create realism when lighting our virtual scenes, IBL simulates real-world lighting by using images of real-world scenes. Any image can be used, and it can be mapped onto a plane (flat), a hemisphere, or a box (for example, if you wanted an indoor scene, with the sides of the box representing the walls, floor, and ceiling). These shapes are not visible objects in the scene because they are only used to provide the lighting. Think of them as transparent filters through which the lighting is projected. The only time you would actually see the images themselves is when objects in your scene reflect light, like a mirror; in that case you would see a reflection of the image in the mirror.
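To make the mirror case concrete, here is a minimal sketch of how a renderer might decide which part of a sphere-mapped image a mirror shows: reflect the viewing ray about the surface normal, then convert the reflected direction into coordinates on a latitude-longitude (equirectangular) image. The function names, the +y-up axes, and the exact u/v convention are assumptions for illustration; UberEnvironment and other tools may orient their maps differently.

```python
import math

def reflect(d, n):
    """Mirror direction d about unit normal n: r = d - 2 (d.n) n."""
    dot = sum(a * b for a, b in zip(d, n))
    return tuple(a - 2.0 * dot * b for a, b in zip(d, n))

def direction_to_equirect_uv(r):
    """Map a unit direction to (u, v) in [0, 1] on a latitude-longitude image.

    Assumed convention (hypothetical): +y is up, v = 0 is straight up (top row),
    v = 0.5 is the horizon, and u wraps around the horizon.
    """
    x, y, z = r
    u = 0.5 + math.atan2(x, -z) / (2.0 * math.pi)
    v = math.acos(max(-1.0, min(1.0, y))) / math.pi  # clamp guards rounding error
    return u, v

# A camera looking along -z at a mirror tilted 45 degrees upward sees the sky.
s = math.sqrt(0.5)
sky_dir = reflect((0.0, 0.0, -1.0), (0.0, s, s))
print(direction_to_equirect_uv(sky_dir))  # v is ~0: the top row of the image
```

In this sketch the image only matters where a reflected ray happens to land, which is why the trees show up in a mirror even though the sphere itself is hidden from the camera.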

Backgrounds are different in that they don't emit light; they can only reflect it. You could use an IBL image as a background if you wanted to, but you would need to set it up that way. IBL images aren't really intended for that, and you are probably better off getting similar background images designed for that purpose, whether planes, world balls, or something else.

HDRs are used for reflections, as you say.

I was just explaining why it's not switched on by default:

You can see the trees in the reflections:

So, in the park image, for example... the trees would "project" the light bouncing off them into the image?

Image-based lighting's primary function is not as a background, or even for reflections. In some cases you can use the images as a background or for reflections, but that shouldn't be taken as a given. In those cases, the image on the sphere has had special attention given to it so that it will render properly as a backdrop. Reflections are more flexible, because a blur in them is sometimes preferable to a sharp image.

Image-based lighting "paints" the light's colors onto the figures in the scene. If you load an all-white figure into the scene and render, you should be able to tell what colors the light contains. I think the core idea is that the light images are captured in such a way that they realistically represent the light in the space where the render takes place. In other words, if you're doing a render of a dining room lit by candlelight, the images used would be of a similar or identical space lit by candlelight. That isn't always practical. IBL can also be used to supplement or replace special lights, because it does a good job of affecting the color of the figures in the scene.

So it's kind of like a stained-glass window?

Sort of. But a window only casts light from the angle it is shining at, while these lights cast color and light on the figure from every angle.

Another thing to be aware of is the use of the term HDR. It is not synonymous with IBL, although IBL often uses HDR. HDR, or HDRI, refers to High Dynamic Range imagery. This consists of multiple photographic images of the same subject taken with different camera settings. The resulting images are then composited (placed one over another and combined) to produce an image that has attributes of all of those used. This can result in some stunning images, although some might call them surreal.
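A heavily simplified sketch of the compositing step, for a single pixel, assuming the camera's pixel values are linear in the light hitting the sensor (real cameras apply a response curve that has to be calibrated out first). Each usable exposure gives an estimate of scene brightness as pixel value divided by exposure time; clipped or nearly black readings are skipped, and mid-tones are trusted most. This is in the spirit of the well-known Debevec and Malik approach, but the thresholds, weights, and function name here are illustrative assumptions.

```python
def merge_exposures(samples, low=0.02, high=0.98):
    """Estimate relative scene radiance for one pixel from bracketed exposures.

    samples: list of (pixel_value, exposure_time_seconds), pixel values in [0, 1],
    assumed linear in sensor exposure (a simplification).
    """
    num = 0.0
    den = 0.0
    for z, t in samples:
        if z <= low or z >= high:
            continue  # too dark (noisy) or clipped: this exposure tells us little
        w = min(z, 1.0 - z)  # "hat" weight: trust mid-tones most
        num += w * (z / t)   # z / t is this exposure's radiance estimate
        den += w
    if den == 0.0:
        raise ValueError("every exposure was clipped or underexposed")
    return num / den

# Hypothetical bracket at 1/1000 s, 1/100 s and 1/10 s.
bright_sky = [(0.04, 0.001), (0.4, 0.01), (1.0, 0.1)]     # longest shot clips
deep_shade = [(0.0005, 0.001), (0.005, 0.01), (0.05, 0.1)]  # short shots near black
print(merge_exposures(bright_sky), merge_exposures(deep_shade))
```

No single exposure in this made-up bracket records both pixels well, but the merged values (about 40 and 0.5) keep their 80:1 ratio, which is the point of combining them.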

There is a third technique at play here, represented by the sphere you showed earlier in your post. IBL often uses "surround" shapes like that sphere. In that case, the image resembles a mirrored ball, because one method of producing such panoramic images is to place a large mirrored ball on the ground and photograph it. Another technique is to use a panoramic tripod mount, take pictures from numerous angles, and then stitch them together.
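Why a mirrored ball works: every point on the ball's photo reflects a different direction of the surroundings, so one picture captures nearly the whole sphere. The sketch below maps a position on the photographed ball to the world direction it shows, assuming a distant (orthographic) camera on the +z axis and a ball that fills the unit circle in the image. The function name and setup are hypothetical simplifications of what light-probe tools do.

```python
import math

def mirror_ball_direction(x, y):
    """World direction visible at normalized image position (x, y) on a mirror-ball photo.

    Assumes an orthographic camera on the +z axis looking toward -z, with the ball
    filling the unit disk x*x + y*y <= 1 in the image.
    """
    r2 = x * x + y * y
    if r2 > 1.0:
        raise ValueError("point lies outside the ball")
    nz = math.sqrt(1.0 - r2)  # sphere normal is n = (x, y, nz)
    # Reflect the viewing ray d = (0, 0, -1) about n: r = d - 2 (d.n) n, with d.n = -nz.
    return (2.0 * nz * x, 2.0 * nz * y, 2.0 * nz * nz - 1.0)

print(mirror_ball_direction(0.0, 0.0))               # center: back toward the camera
print(mirror_ball_direction(math.sqrt(0.5), 0.0))    # ~0.71 of the radius: straight sideways
print(mirror_ball_direction(1.0, 0.0))               # rim: directly behind the ball
```

In this setup everything behind the ball is squeezed into the outer rim of the disk, which is why mirror-ball captures lose detail there and why photographers often shoot the ball from two sides or use the stitched-panorama method instead.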

The distinguishing feature of HDR images (and the important thing with respect to IBL) is not the multiple-exposure business; that's just one method for creating them. The big deal is the high dynamic range itself. Instead of light values ranging from 0 to 65535 (for a 16-bit integer color value), the values are stored as floating-point numbers and can span many orders of magnitude. This means that part of the image can be as bright as, oh, say, the sun, while other parts are very dark. So, a very realistic lighting source.
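A small sketch of what the integer range costs. Storing linear light as a 16-bit integer means choosing which brightness maps to the top code, 65535; anything brighter clips, and anything much darker rounds to zero. The relative brightness numbers for shade, sky, and sun below are made up for illustration, and the function name is hypothetical.

```python
def to_uint16(value, white_point):
    """Store a linear light value as a 16-bit integer code, with white_point -> 65535.

    Values brighter than white_point clip to 65535; a floating-point HDR pixel
    would keep them instead.
    """
    code = round(value / white_point * 65535)
    return max(0, min(65535, code))

shade, sky, sun = 0.01, 1.0, 50000.0  # hypothetical relative brightnesses

# Expose for the sky: the sun clips and becomes indistinguishable from the sky.
print(to_uint16(sky, 1.0), to_uint16(sun, 1.0))            # 65535 65535
# Expose for the sun: the shade and even the sky collapse to almost nothing.
print(to_uint16(shade, sun), to_uint16(sky, sun))          # 0 1
```

A floating-point pixel can simply hold 0.01 and 50000.0 side by side, which is what lets an HDR sky behave like a real sun when it is used as a light source.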

The stunning/surreal images you find online are the result of having to map HDR images back into ordinary JPEG-type images that can be seen on an ordinary monitor (or, incidentally, put on a skydome as a reference image). This process is called 'tonemapping', and there are lots of ways to do it, resulting in very different styles of pictures.
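As a sketch of one simple tonemapping approach: the global operator from Reinhard et al., L / (1 + L), squeezes any non-negative brightness into the range 0 to 1, so both deep shade and the sun land on the screen instead of clipping. The sketch follows it with a plain 1/2.2 gamma (an approximation of sRGB encoding) and rounds to an 8-bit code. The function name, the exposure parameter, and the gamma choice are assumptions; other operators produce the very different looks described above.

```python
def reinhard_to_8bit(value, exposure=1.0, gamma=2.2):
    """Map one linear HDR value (any non-negative float) to an 8-bit display code.

    Uses the simple global Reinhard curve L / (1 + L), which maps [0, inf) into
    [0, 1), followed by a 1/gamma power as an approximation of sRGB encoding.
    """
    scaled = max(0.0, value) * exposure
    compressed = scaled / (1.0 + scaled)
    return round(255 * compressed ** (1.0 / gamma))

for v in (0.0, 0.01, 1.0, 50000.0):
    print(v, reinhard_to_8bit(v))
```

With these settings the made-up values from deep shade to sun all map to distinct codes between 0 and 255 (for example, 1.0 maps to 186), at the cost of compressing contrast in the highlights; changing the curve or the exposure changes the style of the result.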

Conversely, I believe (someone check me) that LuxRender can output its renders as HDR images, so you can have both the sun and shadow in the frame, and it will dutifully record the whole mess. Again, tonemapping must occur to see the result on an ordinary screen.

So it knows what light to project based on the colors and brightness of the image. That opened up an explanation for me of how these work on the technical side.